When I was a kid, my grandpa would take us to the bay to go crabbing. We’d throw a rickety folding cage with bait into the water, and we’d wait an indeterminate amount of time for the (inevitably irate) crustaceans to make their way in.

What does this have to do with data extraction? Well, getting useful morsels of data from a vast ocean of information isn’t unlike crabbing, minus (usually) the maimed cuticles. You need a tool—albeit a much more sophisticated one than a rickety old cage—that can sift through the depths for you, so you can pluck out what you need when you’re ready. Effective, automated data extraction allows you to do just that.

Quick review: What is data extraction?

Data extraction is the process of pulling usable, targeted information from larger, unconsolidated sources. You start with massive, unstructured collections of data like emails, social media posts, and audio recordings. Then a data extraction tool identifies and pulls out the specific information you want: things like usage habits, user demographics, financial numbers, and contact information.

Once you’ve pulled that data out like crabs from the bay, you can cook it into actionable resources like targeted leads, ROI figures, margin calculations, operating costs, and much more.

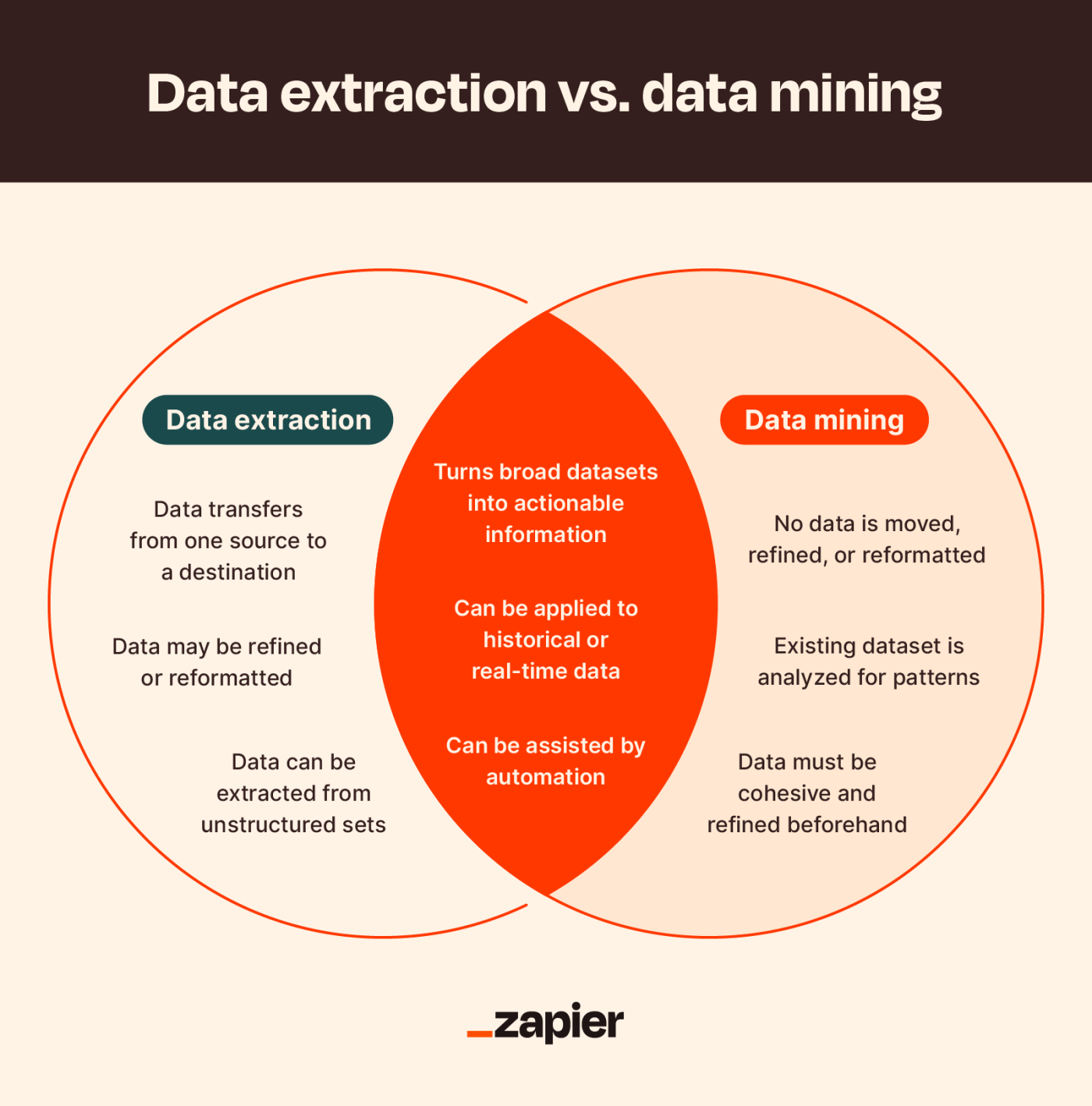

Data extraction vs. data mining

Both data extraction and data mining turn sprawling data sets into information you can use. But while mining simply organizes the chaos into a clearer picture, extraction provides blocks you can build into various analytical structures.

Data extraction example

Data extraction draws specific information from broad databases to be stored and refined.

Let’s say you’ve got several hundred user-submitted PDFs. You’d like to start logging user data from those PDFs in Excel. You could manually open each one and update the spreadsheet yourself, but you’d rather pour Old Bay on an open wound.

So instead, you use a data extraction tool to crawl through those files automatically and log the values of specified keywords. The tool then updates your spreadsheet while you go on doing literally anything else.
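To make the PDF example concrete, here is a minimal Python sketch of the keyword-logging step. It assumes the text has already been pulled out of each PDF (by a PDF library not shown here), that each submission labels its values as `Label: value` lines, and that the field names `Name`, `Email`, and `Plan` are hypothetical. The output is CSV, which Excel opens directly.

```python
import csv
import io
import re

# Hypothetical field labels we want to pull from each submission.
FIELDS = ["Name", "Email", "Plan"]

def extract_fields(text: str) -> dict:
    """Pull the value after each 'Label:' line; missing labels become empty strings."""
    record = {}
    for field in FIELDS:
        # Match the label at the start of a line, then capture the rest of that line.
        match = re.search(rf"^{re.escape(field)}:[ \t]*(.+)$", text, flags=re.MULTILINE)
        record[field] = match.group(1).strip() if match else ""
    return record

def write_spreadsheet(records: list, out) -> None:
    """Write one row per document to a CSV file that Excel can open."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)

# Stand-ins for text already pulled out of the PDFs.
documents = [
    "Name: Ada Lovelace\nEmail: ada@example.com\nPlan: Pro\n",
    "Email: grace@example.com\nName: Grace Hopper\n",
]

records = [extract_fields(doc) for doc in documents]
buffer = io.StringIO()  # swap for open("users.csv", "w", newline="") to write a real file
write_spreadsheet(records, buffer)
print(buffer.getvalue())
```

Because the label order in each document doesn't matter, the second submission still lands in the right columns, with an empty `Plan` cell.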

Data mining example

Data mining, on the other hand, identifies patterns within existing data.

Let’s say your eCommerce shop processes thousands of sales across hundreds of items every month. Using data mining software to assess your month-over-month sales reports, you can see that sales of certain products peak around Valentine’s Day and Christmas. You ramp up timely marketing efforts and make plans to run holiday sales a month in advance.
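A very simple version of that pattern-spotting can be sketched in Python. The sales records and the rule for what counts as a peak (a month selling more than 1.5 times that product's monthly average) are hypothetical choices for illustration; real data mining software uses far more sophisticated methods.

```python
from collections import defaultdict

# Hypothetical monthly sales records: (month, product, units_sold).
sales = [
    ("2023-01", "chocolates", 120), ("2023-02", "chocolates", 410),
    ("2023-03", "chocolates", 130), ("2023-11", "chocolates", 150),
    ("2023-12", "chocolates", 380),
    ("2023-01", "umbrellas", 90), ("2023-02", "umbrellas", 85),
    ("2023-12", "umbrellas", 70),
]

def peak_months(records, factor=1.5):
    """Return, per product, the months whose sales exceed `factor` times that product's average."""
    by_product = defaultdict(dict)
    for month, product, units in records:
        # Sum units per product per month in case a month appears more than once.
        by_product[product][month] = by_product[product].get(month, 0) + units
    peaks = {}
    for product, monthly in by_product.items():
        average = sum(monthly.values()) / len(monthly)
        peaks[product] = sorted(m for m, units in monthly.items() if units > factor * average)
    return peaks

print(peak_months(sales))
```

Here `chocolates` peaks in February and December, while `umbrellas` has no month that clears the threshold.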

Structured vs. unstructured data

Structured data follows consistent formatting rules that make it easy to search and crawl, while unstructured data is less defined and harder to search or crawl. This binary might tempt Type-A thinkers to assume structured data is always preferable, but each type has a role to play in business intelligence.

Structured data

Think of structured data as a collection of figures that all follow the same value guidelines. This consistency makes them simple to categorize, search, reorder, or arrange in a hierarchy. Structured datasets are also easy to automate for logging or reporting, since every entry shares the same format.

Examples of structured data include:

- Spreadsheets
- Delimited text files (such as CSV)
- SQL databases
- Webforms
- Timelogs
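To show why consistent formatting makes structured data easy to work with, here is a small Python sketch over a hypothetical timelog stored as CSV. Because every row has the same columns, searching, reordering, and reporting each take only a line or two.

```python
import csv
import io

# A small structured dataset: every row has the same columns and value types.
TIMELOG_CSV = """employee,date,hours
Priya,2024-03-01,7.5
Marcus,2024-03-01,8
Priya,2024-03-02,6
Marcus,2024-03-02,9.25
"""

rows = list(csv.DictReader(io.StringIO(TIMELOG_CSV)))

# Because every row shares the same format, searching is a simple filter...
priya_rows = [row for row in rows if row["employee"] == "Priya"]

# ...reordering is a single sort on a typed column...
by_hours = sorted(rows, key=lambda row: float(row["hours"]), reverse=True)

# ...and reporting is a straightforward aggregation.
totals = {}
for row in rows:
    totals[row["employee"]] = totals.get(row["employee"], 0.0) + float(row["hours"])

print(totals)
```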

Unstructured data

Unstructured data is less definite than structured data, making it tougher to crawl, search, or apply values and hierarchies to. The term “unstructured” is a little misleading in that this data does have its own structure—it’s just amorphous. Using unstructured data often requires additional categorization like keyword tagging and metadata, which can be assisted by machine learning.

Examples of unstructured data include:

- Social media posts
- Emails
- Photo and video files
- Websites
- Audio recordings
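Keyword tagging is one way to give unstructured text some searchable structure. The sketch below is plain rule-based matching, not machine learning, and the tag vocabulary is a hypothetical example; an ML model would typically replace the hand-written keyword sets in a production setup.

```python
import re

# Hypothetical tag vocabulary: each tag is triggered by any of its keywords.
TAG_KEYWORDS = {
    "billing": {"invoice", "refund", "charge"},
    "shipping": {"delivery", "shipped", "tracking"},
    "praise": {"love", "great", "thanks"},
}

def tag_text(text: str) -> set:
    """Return the tags whose keywords appear as whole words in the text."""
    # Lowercase and split into simple word tokens so "Love!" matches "love".
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {tag for tag, keywords in TAG_KEYWORDS.items() if words & keywords}

post = "Love the new mug! Still waiting on a refund for the broken one, though."
print(sorted(tag_text(post)))
```

Once a post carries tags like `billing` or `praise`, it can be filtered and counted almost like a row of structured data.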

How to extract data

There are two data extraction methods: incremental and full. Like structured and unstructured data, one isn’t universally superior to the other, and both can be vital parts of your quest for business intelligence.

Incremental extraction

Incremental extraction pulls only the data that has changed since the last extraction, which requires extra logic to detect those changes. You could use incremental extraction to monitor shifting data, like changes to inventory since the last run. Identifying these changes requires the dataset to have timestamps or a change data capture (CDC) mechanism.

To continue the crabbing metaphor, incremental extraction is like using a baited line that goes taut whenever there’s a crab on the end—you only pull it when there’s a signaled change to the apparatus.
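Here is a minimal Python sketch of timestamp-based incremental extraction, using an in-memory SQLite table as a stand-in source. The table, its `updated_at` column, and the watermark approach are assumptions for illustration: each run pulls only rows newer than the last timestamp it saw, then remembers the newest one for next time.

```python
import sqlite3

# In-memory stand-in for an inventory table with a last-modified timestamp column.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER, updated_at TEXT)")
conn.executemany(
    "INSERT INTO inventory VALUES (?, ?, ?)",
    [("A-1", 10, "2024-05-01T09:00"), ("B-2", 4, "2024-05-01T12:30")],
)

def extract_incremental(conn, last_watermark):
    """Pull only rows changed after the previous run; return them plus the new watermark."""
    # ISO-8601 strings in one fixed format sort correctly as text.
    rows = conn.execute(
        "SELECT sku, qty, updated_at FROM inventory WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# First run: everything after the epoch counts as new.
first_batch, watermark = extract_incremental(conn, "1970-01-01T00:00")

# Someone updates one SKU; the next run picks up only that change.
conn.execute("UPDATE inventory SET qty = 3, updated_at = '2024-05-02T08:15' WHERE sku = 'B-2'")
second_batch, watermark = extract_incremental(conn, watermark)
print(second_batch, watermark)
```

One limitation worth knowing: a timestamp filter like this can't see deleted rows, which is one reason dedicated CDC mechanisms, which track inserts, updates, and deletes, exist.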

Full extraction

Full extraction pulls all of the data from a source at once, without filtering for what has changed. This is useful if you want to create a baseline of information or an initial dataset to refine later. If the data source can automatically notify you of changes or push updates after extraction, you may not need incremental extraction.

Full extraction is like tossing a huge net into the water, then yanking it up. Sure, you might get a crab or two, but you’ll also get a bunch of other stuff to sift through.
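A full extraction can be sketched in Python as a plain `SELECT` of every row. The in-memory SQLite table is a hypothetical source, and the snapshot comparison is just one illustrative way to sift the haul: with two full extracts in hand, you can spot additions, removals, and changes even when the source has no timestamps.

```python
import sqlite3

def extract_full(conn):
    """Pull every row from the source table, with no filtering, as a baseline snapshot."""
    return {sku: qty for sku, qty in conn.execute("SELECT sku, qty FROM inventory")}

def diff_snapshots(old, new):
    """Compare two full snapshots to see what was added, removed, or changed."""
    added = {k: new[k] for k in new.keys() - old.keys()}
    removed = sorted(old.keys() - new.keys())
    changed = {k: (old[k], new[k]) for k in old.keys() & new.keys() if old[k] != new[k]}
    return added, removed, changed

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER)")
conn.executemany("INSERT INTO inventory VALUES (?, ?)", [("A-1", 10), ("B-2", 4)])
baseline = extract_full(conn)

# Simulate changes at the source between two full extractions.
conn.execute("UPDATE inventory SET qty = 3 WHERE sku = 'B-2'")
conn.execute("DELETE FROM inventory WHERE sku = 'A-1'")
conn.execute("INSERT INTO inventory VALUES ('C-3', 12)")

added, removed, changed = diff_snapshots(baseline, extract_full(conn))
print(added, removed, changed)
```

The trade-off is the one the net metaphor suggests: every run hauls in the whole table, so this gets expensive as the source grows.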

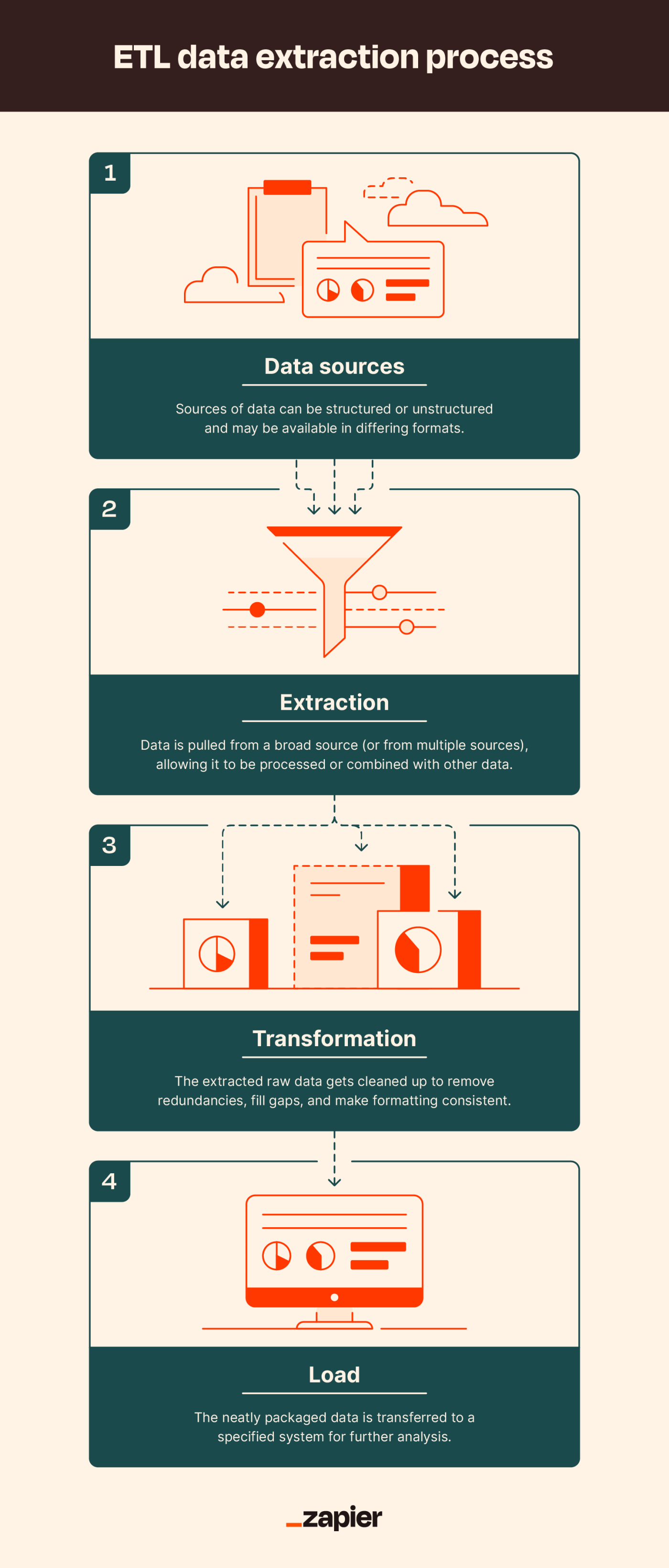

The data extraction process

Data extraction is the foundation (AKA the “E”) of ETL (extract, transform, load), the process businesses use to acquire data for business intelligence. (You may have heard of ELT, which loads raw data before transforming it, but the basic functions are the same in either case.)

- Extract: Data is pulled from a broad source (or from multiple sources), allowing it to be processed or combined with other data.
- Transform: The extracted raw data gets cleaned up to remove redundancies, fill gaps, and make formatting consistent.
- Load: The neatly packaged data is transferred to a specified system for further analysis.
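The three ETL steps map naturally onto three small functions. This Python sketch is illustrative only: the CSV string stands in for a real source, the cleanup rules (lowercase emails, uppercase country codes, `Unknown` for missing names, first record wins on duplicates) are assumptions, and an in-memory SQLite database plays the role of the destination system.

```python
import csv
import io
import sqlite3

RAW_CSV = """email,name,country
ANA@example.com,Ana,pt
ana@example.com,Ana,PT
ben@example.com,,us
"""

def extract(raw_text):
    """Extract: read raw rows from the source (a CSV string standing in for a file or API)."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: normalize formats, fill gaps, and drop duplicates (first record wins)."""
    cleaned = {}
    for row in rows:
        email = row["email"].strip().lower()
        cleaned.setdefault(email, {
            "email": email,
            "name": row["name"].strip() or "Unknown",  # fill missing names
            "country": row["country"].strip().upper(),
        })
    return list(cleaned.values())

def load(rows, conn):
    """Load: write the cleaned rows into the destination system (here, SQLite)."""
    conn.execute("CREATE TABLE IF NOT EXISTS contacts (email TEXT PRIMARY KEY, name TEXT, country TEXT)")
    conn.executemany("INSERT INTO contacts VALUES (:email, :name, :country)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT * FROM contacts ORDER BY email").fetchall()
print(result)
```

The two differently formatted copies of Ana's record collapse into one clean row, and Ben's missing name is filled in before anything reaches the destination.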

Types of data extraction tools

Data extraction tools fall into four categories: cloud-based, batch processing, on-premise, and open-source. These types aren’t all mutually exclusive, so some tools may tick a few (or even all) of these boxes.

- Cloud-based tools: These scalable web-based solutions let you crawl websites, pull online data, and then access it through a platform, download it in your preferred file type, or transfer it to your own database.
- Batch processing tools: If you’re looking to move massive amounts of data at once (especially if not all of that data is in consistent or current formats), batch processing tools can help by extracting it in (you guessed it) batches.
- On-premise tools: Installed on your own servers, these tools can harvest data as it arrives, then automatically validate, format, and transfer it to your preferred location.
- Open-source tools: Need to extract data on a budget? Look for open-source options, which can be more affordable and accessible for smaller operations.
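Batch processing boils down to moving data in fixed-size chunks rather than one record (or one giant load) at a time. This Python sketch is a hypothetical illustration, not any particular tool's behavior; the batch size and the cleanup step are placeholders.

```python
from itertools import islice

def batches(iterable, size):
    """Yield lists of up to `size` items so a large source can be moved one chunk at a time."""
    iterator = iter(iterable)
    while batch := list(islice(iterator, size)):
        yield batch

def extract_in_batches(source_rows, size, sink):
    """Hypothetical batch job: normalize each chunk, then hand it to a sink in one call."""
    for batch in batches(source_rows, size):
        sink([row.strip().lower() for row in batch])

loaded = []
# A generator stands in for a large source that shouldn't be read into memory all at once.
extract_in_batches((f" Record-{n} " for n in range(7)), size=3, sink=loaded.append)
print([len(batch) for batch in loaded])
```

On Python 3.12 and later, `itertools.batched` provides the same chunking (as tuples) out of the box.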

Benefits of automated data extraction tools

When making decisions, devising campaigns, or scaling, you can never have too much information. But you do need to whittle down that information into digestible bits. And like all practices, data extraction is better with automation—and not just because it saves you (or an intern) the effort of combing through massive amounts of files manually. Automating data extraction:

- Improves decision-making: With a steady supply of targeted data, you and your team can make decisions based on facts, not assumptions.
- Enhances visibility: By identifying and extracting the data you need when you need it, these tools show you exactly where your business stands at any given time.
- Increases accuracy: Automation reduces the human error that creeps in when people move and format data manually and repeatedly.
- Saves time: Automated extraction tools free up employees to focus on high-value tasks, like applying that data.

How to automate data extraction with Zapier

Making the most of your data means extracting more actionable information automatically—and then putting it to use. Here are a few examples of how Zapier can help your business do both by connecting and automating the software and processes you depend on.

You can use Formatter by Zapier to pull contact information and URLs, change the format, and then transfer the data.
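To illustrate the kind of extraction Formatter performs, here is a regex-based Python sketch that pulls email addresses and URLs out of free text. It is not Formatter's implementation, and the patterns are deliberately simplified assumptions that will miss some valid addresses.

```python
import re

# Deliberately simple patterns; real-world email and URL syntax is messier than this.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
URL_RE = re.compile(r"https?://[^\s<>\"')]+")

def extract_contacts(text):
    """Return unique emails (lowercased) and URLs, in the order they first appear."""
    emails = list(dict.fromkeys(m.lower() for m in EMAIL_RE.findall(text)))
    # Strip sentence punctuation that the URL pattern may have swallowed.
    urls = list(dict.fromkeys(u.rstrip(".,;:!?") for u in URL_RE.findall(text)))
    return {"emails": emails, "urls": urls}

note = "Reach Dana at Dana.Lee@example.com or dana.lee@example.com; portfolio: https://example.com/dana."
print(extract_contacts(note))
```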

True to its name, Email Parser by Zapier automatically recognizes patterns in your emails, parses text from them, and then transfers the text to other apps or databases.
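Email parsing along these lines can be sketched with Python's standard `email` package plus a few regular expressions. The order-notification email and its `Order number`/`Customer`/`Total` labels are hypothetical; this illustrates template-based parsing in general, not how Email Parser by Zapier works internally.

```python
import re
from email import message_from_string
from email.policy import default

RAW_EMAIL = """From: orders@shop.example
To: me@example.com
Subject: New order #1042

Order number: 1042
Customer: Jamie Ortiz
Total: $58.20
"""

# Hypothetical template: the labels we expect in every order-notification email.
PATTERNS = {
    "order_number": r"Order number:\s*(\d+)",
    "customer": r"Customer:[ \t]*(.+)",
    "total": r"Total:\s*\$([\d.,]+)",
}

def parse_order_email(raw):
    """Parse a raw email and pull labeled values out of its plain-text body."""
    msg = message_from_string(raw, policy=default)
    body = msg.get_body(preferencelist=("plain",)).get_content()
    fields = {"sender": str(msg["From"]), "subject": str(msg["Subject"])}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, body)
        fields[name] = match.group(1).strip() if match else None
    return fields

order = parse_order_email(RAW_EMAIL)
print(order)
```

Each parsed dictionary could then be sent on as one row or record in another app.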

Using a tool like Wachete, you can scrape data from websites, monitor changes like prices and stock, and create an RSS feed from the data.
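Price monitoring reduces to two steps: extract the current price from a page, then compare it with the last value you recorded. This Python sketch uses the standard-library `html.parser`; the `price` class name and page markup are hypothetical, fetching the page is left out, and it is not how Wachete itself works.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collect the text inside elements whose class list includes 'price' (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # Any new opening tag resets the flag, so this sketch only handles a price
        # written directly inside its tagged element.
        classes = (dict(attrs).get("class") or "").split()
        self._in_price = "price" in classes

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(float(data.strip().lstrip("$").replace(",", "")))
            self._in_price = False

def check_price(html, last_price):
    """Return (current_price, changed?) for the first price found on the page."""
    parser = PriceParser()
    parser.feed(html)
    current = parser.prices[0] if parser.prices else None
    return current, current is not None and current != last_price

page = '<div class="product"><span class="price sale">$1,249.00</span></div>'
print(check_price(page, last_price=1299.0))
```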

For a more role-specific example, CandidateZip can pull data straight from resumes as they arrive in your inbox or cloud storage app.

And here’s an example in action: Realty Trust Services, LLC, a real estate brokerage firm in Ohio, used Docparser to cut 50 hours of data extraction per month by automating utility bill scanning and sending the data straight to a Google Sheet, which then automatically sent usage alerts.
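The bill-to-spreadsheet-to-alert flow described above can be sketched in a few lines of Python. The bill layout, field labels, and the 900 kWh alert threshold are hypothetical, and the list `row` merely stands in for a Google Sheets row; this is not Docparser's API.

```python
import re

# Hypothetical threshold above which a usage alert is raised.
ALERT_KWH = 900

def parse_bill(text):
    """Pull the account number, billing period end, and kWh used from a bill's text."""
    account = re.search(r"Account\s*#?:?\s*(\d+)", text)
    usage = re.search(r"([\d,]+)\s*kWh", text)
    period = re.search(r"Period ending:\s*(\d{4}-\d{2}-\d{2})", text)
    return {
        "account": account.group(1) if account else None,
        "period_end": period.group(1) if period else None,
        "kwh": int(usage.group(1).replace(",", "")) if usage else None,
    }

def usage_alert(bill):
    """Return an alert message when usage exceeds the threshold, else None."""
    if bill["kwh"] is not None and bill["kwh"] > ALERT_KWH:
        return f"Account {bill['account']}: {bill['kwh']} kWh exceeds {ALERT_KWH} kWh"
    return None

bill_text = "Account #: 558201\nPeriod ending: 2024-04-30\nTotal usage: 1,120 kWh\n"
bill = parse_bill(bill_text)
row = [bill["account"], bill["period_end"], bill["kwh"]]  # stand-in for one spreadsheet row
print(row, usage_alert(bill))
```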

Last but not least, Zapier also created Transfer by Zapier—currently in beta. It allows you to move app data exactly where you need it, on demand. Explore the guide on how to move data in bulk using Transfer to learn how you can move leads, customer information, events, and more—without the cleanup duty.

Whether you’re crawling for user behavior, pulling contact information, or monitoring month-over-month ROI, your business is only as strong as your data. And when you automate data extraction, your valued human team members can spend more time doing valuable human tasks, so you can scale your business—or take the team out for a mid-day crab boil.
