The year 2020 witnessed raging Australian wildfires, a presidential impeachment, and the Covid-19 pandemic. Google Ads had some big changes this year, too.

Search Term Report Downsized

In September, Google Ads announced that it would show fewer user queries in its search terms report. Contributor Matt Umbro addressed the change in an excellent article, including his finding that the revised reports omitted 37 percent of clicks.

Since then, I have seen much higher percentages in accounts that I manage, with hidden queries accounting for up to 75 percent of clicks. Google cites privacy concerns to justify the change. More likely, however, the motive is to conceal increasingly broad keyword matching. The only silver lining is that audits to identify negative keywords now take less time.
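To check the hidden share in your own account, compare a campaign’s total clicks with the clicks attributed to the queries the report does show. The Python sketch below assumes you have exported the visible search terms as (query, clicks) pairs and read the campaign’s total clicks separately; the function name and the figures are hypothetical.

```python
def hidden_click_share(total_clicks: int, visible_terms: list[tuple[str, int]]) -> float:
    """Return the fraction of clicks not attributed to any visible search term.

    Hypothetical helper: total_clicks is the campaign total, visible_terms is
    the exported search terms report as (query, clicks) pairs.
    """
    if total_clicks <= 0:
        raise ValueError("total_clicks must be positive")
    visible_clicks = sum(clicks for _query, clicks in visible_terms)
    # Whatever the report does not account for came from hidden queries.
    return (total_clicks - visible_clicks) / total_clicks


# Made-up example: 250 of 1,000 campaign clicks map to visible queries.
terms = [("blue running shoes", 150), ("trail shoes sale", 100)]
share = hidden_click_share(1000, terms)
print(f"{share:.0%} of clicks come from hidden queries")  # 75% of clicks come from hidden queries
```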

Advertisers wanting to retain highly granular insights should consider the Microsoft Ads search terms report. Matt addressed it in his article, stating that Microsoft’s search terms report “provides the same information as Google, but Microsoft hasn’t shown any indication that it will be hiding queries. Use the queries found in Microsoft to add negative keywords and new positive keywords to Google.”

Lead Form Extension

Lead-generation ads are popular on social platforms such as LinkedIn, Facebook, and Instagram. Google Ads has joined the party with “Lead Forms.”

A click on this extension loads a form instead of sending the user to the advertiser’s website. Lead Forms are available on Google Search ads, YouTube ads, and Discovery ads, which appear across multiple Google properties.

Lead Forms can integrate with an advertiser’s customer relationship management (CRM) platform, streamlining the follow-up process.

Google Shopping: Free Listings

In April, at the onset of the pandemic, Google announced it would bring back free listings on Google Shopping. This was a boon to ecommerce companies.

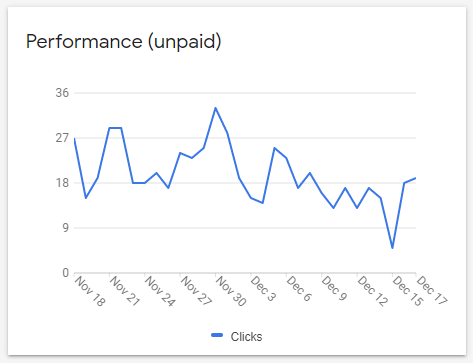

A report in Google Merchant Center shows how many clicks come from the free listings. More robust tracking (engagement, conversions) is available in Google Analytics by tagging landing-page URLs with custom UTM parameters to measure clicks from “Surfaces across Google.”

A report in Google Merchant Center for free Shopping listings shows only the number of clicks.
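UTM tagging amounts to appending a few query parameters to each landing-page URL so that Google Analytics can attribute the visit. Here is a minimal Python sketch using only the standard library. The parameter values (`google`, `organic`, `surfaces_across_google`) are hypothetical naming choices, so use whatever convention your Analytics reports expect; how you apply the tagged URLs in your product feed depends on your own setup.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str) -> str:
    """Append UTM parameters to a URL, keeping any existing query parameters.

    Note: repeated query keys in the original URL collapse to their last value.
    """
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    params.update({"utm_source": source, "utm_medium": medium, "utm_campaign": campaign})
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical product URL and naming convention.
tagged = add_utm(
    "https://www.example.com/products/blue-widget?variant=42",
    source="google",
    medium="organic",
    campaign="surfaces_across_google",
)
print(tagged)
# https://www.example.com/products/blue-widget?variant=42&utm_source=google&utm_medium=organic&utm_campaign=surfaces_across_google
```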

Emphasis on Automation

Machine learning now drives seemingly every facet of Google Ads, including:

- Responsive Display Ads (RDAs) require advertisers to upload multiple headlines (short and long), description lines, and images and logos in 1:1 and 1.91:1 aspect ratios. Google then dynamically assembles ad units from various combinations.

Google’s reporting on these ads is limited to simple Low, Good, and Best ratings for each element, which appear after about 14 days.

- Responsive Search Ads (RSAs), similar to RDAs, accept up to 15 headlines and up to four description lines. Google then optimizes performance across various combinations.

My biggest beef with Responsive Display and Search Ads is the minimal reporting. Google provides impressions for each element but not clicks or conversions. We know only that certain elements are used more than others.

Similarly, advertisers can see impressions and percentages for top combinations, which tell us only how often elements are displayed together, not how the elements or combinations perform.

My workaround for obtaining click-through and conversion metrics is to run the top combinations as expanded text ads and compare them against the RSAs. That reveals which copy is working.
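The comparison itself is simple arithmetic: click-through rate is clicks divided by impressions, and conversion rate is conversions divided by clicks. The sketch below computes both for an RSA and for an expanded text ad built from a top combination. The class name and all figures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Hypothetical container for one ad's exported metrics."""
    name: str
    impressions: int
    clicks: int
    conversions: int

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks per impression.
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        # Conversion rate: conversions per click.
        return self.conversions / self.clicks if self.clicks else 0.0


# Made-up figures for illustration only.
ads = [
    AdStats("RSA", impressions=20000, clicks=600, conversions=18),
    AdStats("ETA: top combination 1", impressions=8000, clicks=320, conversions=12),
]
for ad in ads:
    print(f"{ad.name}: CTR {ad.ctr:.2%}, conversion rate {ad.conversion_rate:.2%}")
```

Because the RSA’s own report withholds clicks and conversions per element, the ad-level totals are the only like-for-like figures available for this comparison.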

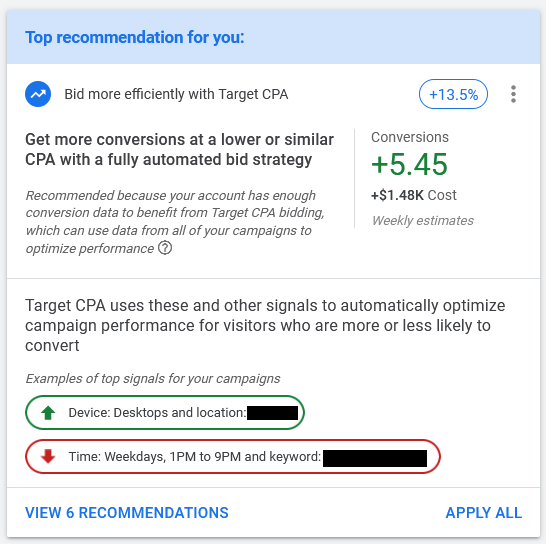

- Automated Bidding. Google suggests bid amounts in the Recommendations section, performance tabs, and even the ads editor.


Matt Umbro has addressed automated bidding, offering pros and cons for each type. As he explains, accepting Google’s recommendations can hurt performance because they don’t consider internal metrics such as profitability or lead quality.

To test automated bidding, create an experiment in Google Ads that splits traffic between automated and manual bidding. Then see which performs better, and let the data inform your decision.
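Once the experiment has run, a significance check helps keep a lucky week from deciding the outcome. The sketch below applies a standard two-proportion z-test to the conversion rates of the two arms. It is one reasonable approach among several; the function name and the numbers are hypothetical, and it ignores internal metrics such as profitability and lead quality.

```python
import math


def two_proportion_z_test(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Arm A might be manual bidding and arm B automated bidding (hypothetical).
    """
    p_a = conv_a / clicks_a
    p_b = conv_b / clicks_b
    # Pooled rate under the null hypothesis that both arms convert equally.
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    if se == 0:
        raise ValueError("cannot test: pooled conversion rate is 0 or 1")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, via the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up experiment: 1,000 clicks per arm.
z, p = two_proportion_z_test(conv_a=30, clicks_a=1000, conv_b=45, clicks_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up figures, z falls below 1.96, so the apparent lift would not clear a conventional 5 percent significance threshold; the experiment would need more data before declaring a winner.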

- “Smart” features. In Google Ads parlance, “smart” refers to machine learning with little control over inputs and sparse reporting. It ranges from entire campaigns (such as Smart Shopping) to Smart Audiences.

“Smart” features can produce good results for time-strapped businesses. However, experienced advertisers will likely achieve better performance with hands-on management. The key is testing.