The CAP theorem informs how we build distributed systems by describing the tradeoffs we must make when splitting a system into multiple distributed parts. I think the same holds true when splitting an engineering organization into multiple teams: we have to make similar tradeoffs in how those teams make changes to their code.

The CAP Theorem for Distributed Systems

If you’re not familiar with the CAP theorem, it’s a way of thinking about tradeoffs when building distributed systems. It states that it is impossible for a distributed data store to simultaneously provide more than two of the following three guarantees:

- Consistency: Every read request will receive the most recent write or an error.
- Availability: Every request will receive a non-error response, but it might not be the most recent write.
- Partition tolerance: The system will continue to operate even if parts of the system can’t communicate with each other.

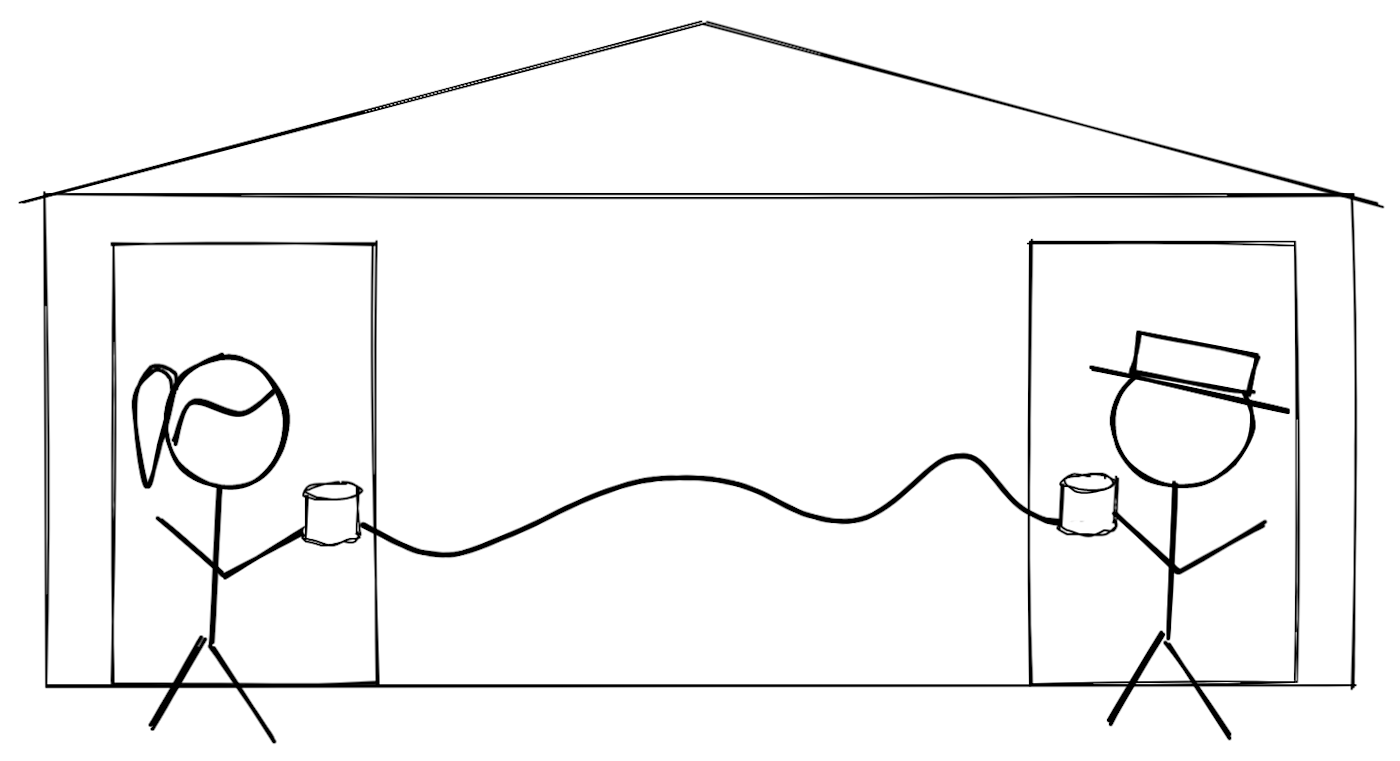

Talking about a “theorem” and “distributed systems” may make this sound complicated, but it’s fairly straightforward to reason about. Let’s imagine a “distributed system” of two greeters working at two separate doors of a business. They’re counting customers as they enter the doors.

We’d like to be able to ask either greeter, at any time, for the total number of customers who have entered the store today. So we give each greeter a tin-can phone, and any time one of them counts a customer, they call the other greeter with their updated count.

Now, think about how to handle their phone line getting cut:

- CA: If the phone line gets cut, we close the doors and don’t let anyone in until we can fix the problem.
- CP: If the phone line gets cut, our greeters can keep counting, but we will refuse to give a total count until we fix the problem.
- AP: If the phone line gets cut, our greeters can keep counting, and they’ll report the total as their personal count plus the last known count from the other greeter.
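The greeter example can be sketched as a small simulation. This is a minimal illustration, assuming a hypothetical `GreeterSystem` class (not from the original) whose `mode` selects one of the three responses to a cut phone line; setting `line_up = False` stands in for the partition.

```python
class Greeter:
    """One greeter at one door: a local count plus the last count heard from the other door."""

    def __init__(self, name: str):
        self.name = name
        self.own_count = 0
        self.last_known_other = 0


class GreeterSystem:
    """Two greeters joined by a tin-can phone; `mode` ("CA", "CP", or "AP") picks the tradeoff."""

    def __init__(self, mode: str):
        if mode not in ("CA", "CP", "AP"):
            raise ValueError(f"unknown mode: {mode}")
        self.mode = mode
        self.a = Greeter("door A")
        self.b = Greeter("door B")
        self.line_up = True  # False simulates a cut phone line (a partition)

    def _other(self, greeter: Greeter) -> Greeter:
        return self.b if greeter is self.a else self.a

    def customer_enters(self, greeter: Greeter) -> bool:
        """Count a customer at this greeter's door; return False if the door is closed."""
        if not self.line_up and self.mode == "CA":
            return False  # CA: close the doors until the line is fixed
        greeter.own_count += 1
        if self.line_up:
            # Phone the updated count to the other greeter.
            self._other(greeter).last_known_other = greeter.own_count
        return True

    def total(self, greeter: Greeter) -> int:
        """Ask one greeter for the store-wide total."""
        if not self.line_up and self.mode == "CP":
            # CP: refuse to answer rather than risk a stale total.
            raise RuntimeError("phone line is down; total unavailable")
        # CA never misses a customer (doors are closed); AP may return a stale total.
        return greeter.own_count + greeter.last_known_other

    def repair_line(self) -> None:
        """Reconnect the phone line and exchange the latest counts."""
        self.line_up = True
        self.a.last_known_other = self.b.own_count
        self.b.last_known_other = self.a.own_count


if __name__ == "__main__":
    for mode in ("CA", "CP", "AP"):
        store = GreeterSystem(mode)
        store.customer_enters(store.a)
        store.customer_enters(store.b)
        store.line_up = False  # cut the phone line
        let_in = store.customer_enters(store.a)
        try:
            answer = store.total(store.b)
        except RuntimeError as err:
            answer = f"error: {err}"
        print(mode, "let in:", let_in, "| door B reports:", answer)
```

In this run, CA keeps an accurate total of 2 by turning the third customer away, CP counts the customer but refuses to report a total, and AP counts the customer while door B reports a stale 2 when the true total is 3.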

There’s no option to get all three of C, A, and P: if we allow the system to be partitioned, we have to choose between a consistent count and an available one.

If you try to build any distributed system that has all three, you’re going to fail. Full stop. That’s why it’s often so difficult to choose the right data store: on some level, products need all three, but having all three is impossible. The only choice is to make appropriate tradeoff decisions and pick the two that are most important.

Applying the Theorem to Engineering Teams

As Zapier has grown, we’ve had to transition from a single team sharing a monolithic codebase to multiple teams owning independent codebases. We’ve often struggled to balance sharing code, which keeps our developer and user experiences consistent, against decoupling codebases, which lets teams ship changes autonomously. That made me realize there’s a roughly equivalent CAP theorem that could inform the tradeoffs we make. (A colleague reviewing this shared an article to let me know that I’m far from the first to apply this concept to teams, and that you can easily apply it beyond engineering teams.)

- Consistency: Teams will always use code that shares a common developer experience and ship code that provides a common user experience. However, they may sometimes have to wait on other teams if those teams’ code changes aren’t finished or don’t work as expected.
- Availability: Teams will always keep working while other teams finish or fix their code, but they may have different developer experiences, or they may ship different user experiences.
- Partition tolerance: The company will continue shipping new code to users even if teams can’t coordinate their code changes.

If you have to pick two, you end up with these options for how you share code across teams:

- CA: Code is in a single codebase shared by all teams. Tools and patterns can be applied consistently across the whole codebase. But a failure in a shared piece of code can break all other code, and a failure in a shared tool can affect the workflow for all teams.
- CP: Code is separated into multiple codebases, but sharing code is encouraged to maintain a consistent user experience, and common tooling and patterns are enforced to maintain a consistent developer experience. As soon as a new version of a shared library is released, all teams must update their code to use that new version immediately. Any inconsistency is seen as a defect, and all other work must stop until the inconsistency is resolved.
- AP: Code is separated into multiple codebases, and sharing code is discouraged so that teams are always able to ship their latest changes. It is difficult to maintain shared tooling that works across all codebases, and user experience may sometimes be inconsistent. But teams are never blocked waiting on changes from other teams, and when libraries are shared, they are free to upgrade on their own schedule.

Now, there’s a big caveat here. Note that the CAP theorem is for distributed systems. Unfortunately, one of these options is not actually distributed. A CA system is something like a traditional database. A traditional database has some really nice qualities like being able to easily join data together with foreign key relationships and supporting transactions so that you can atomically update a bunch of data all at once. But a traditional database is not a distributed system. Yes, there are read replicas and hot standbys, but there are inherent scaling limits. Eventually, you pick the biggest database instance with the most CPUs and the most memory and the biggest disk, and you have no choice but to start splitting up the database. You also have to be really careful about bad queries. If your whole business is running on a single database, then one bad query for a low-value app can kill the performance of the whole database and thus kill your high-value apps and your business with it.

Trying to violate the CAP theorem for systems at scale is generally a recipe for failure, and the same is likely to be true for engineering teams. As an engineering organization splits into multiple teams, those teams often keep operating with a CA model because, as with a traditional database, that’s the easy thing to do. And it is the right thing at a certain scale. A single traditional database is almost always the right solution on day one! But at some point, you outgrow a single traditional database. In the same way, at some point, you outgrow a single traditional codebase. Your choice then boils down to:

- Fight it. Assume that you can keep building on a single shared codebase. This is exactly like throwing more CPUs and memory and disk at a traditional database. It will work up to a point. But the longer you wait, the more pain you’ll feel trying to bust up all those foreign key relationships. With code, the longer you wait, the more painful (and time-consuming) it will be to break up all that code.
- Exercise plenty of authority (from an architecture team, formal guilds, management, strict process, change management, etc.) to keep things consistent across teams. Sacrifice some autonomy for more consistency. Engineers will be happier that it’s easy to absorb system-wide context, but they’ll be frustrated when they can’t independently choose a solution they think is appropriate.
- Give up on consistency. Let teams develop their own solutions at their own pace. Allow duplication of code and out-of-sync versions to prevent teams from being blocked. Engineers will be happier that they can choose their own path, but they’ll be frustrated when they look at all the seemingly weird decisions that other teams are making. Central teams (Infrastructure, Security, Architecture, etc.) may be especially frustrated trying to support all those variants.

Just like there’s no single right option when picking a distributed data store, there’s no single right option when picking a model for teams. But you have to pick a model so you know what tradeoffs you’re making.

Note that all the limits we’ve identified for code apply to other engineering artifacts as well: documentation, processes, decision records, etc. Anything that teams are trying to create and use across the organization is bound by the same constraints.

One weird trick to cheat the CAP theorem

Although I would advise against fighting the CAP theorem, there is a simple hack to cheat a bit. You don’t have to make a single decision for every team and every line of code. Just like you can choose different storage solutions with different tradeoffs for different parts of your product, you can choose different solutions for your teams and their code.

You may want to apply a lot of authority when it comes to your identity solution, for example. You probably don’t want your customers to have multiple logins to different systems. You probably don’t want them to have weird glitches where their profile is out of sync. Similarly, you may want a fairly consistent UI for your users. You probably don’t want your teams switching the colors for primary and secondary buttons, since that would be incredibly confusing for your customers.

By contrast, you may want to avoid restricting every team to the same version of React, since that forces them all into a slow upgrade in lockstep. Likewise, you may want teams to be able to pick their own AWS services for their systems, since they are likely to understand their needs best and ultimately need to be able to support those systems.

The CAP theorem simplified

If you want to dig more into the system side of things, there’s a great post, “You Can’t Sacrifice Partition Tolerance,” that explains in further detail why a distributed system can’t disregard partition tolerance. It also presents a simplified model, essentially blessed by Eric Brewer, who originated the CAP theorem. That simplified model focuses on two things: yield and harvest.

Yield: The percentage of requests that return some kind of result, even if some of those results might be incorrect. You might increase the yield by returning the latest known version of a resource (from available nodes) without actually guaranteeing it’s the latest version (because some nodes might be unavailable).

Harvest: The percentage of each result that is actually correct. You might increase the harvest by returning an error if you can’t guarantee you have the latest version of a resource.

In other words, your system can do one of two things:

- Always answer at the cost of possibly being incorrect, i.e., optimize for yield.
- Always be correct at the cost of sometimes having to refuse to answer, i.e., optimize for harvest.

These map roughly to availability (A) and consistency (C), but there’s a bit more wiggle room. For example, a system can be unavailable without affecting its yield, as long as no requests arrive while it’s unavailable.
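To make the two measures concrete, here is a minimal sketch that scores a batch of requests using the definitions above. It assumes, as a simplification, that each answer is either entirely correct or entirely stale; the function name `yield_and_harvest` and the encoding of outcomes are illustrative, not from the original.

```python
from typing import List, Optional, Tuple


def yield_and_harvest(outcomes: List[Optional[bool]]) -> Tuple[float, float]:
    """Score a batch of requests.

    Each outcome is one request:
      None  -> the system refused to answer (returned an error)
      True  -> it answered with the latest value
      False -> it answered with a stale value

    yield   = answered requests / all requests
    harvest = correct answers / answered requests
    """
    if not outcomes:
        raise ValueError("need at least one request")
    answered = [o for o in outcomes if o is not None]
    yield_ = len(answered) / len(outcomes)
    # If nothing was answered, nothing incorrect was returned, so treat harvest as 1.0.
    harvest = sum(answered) / len(answered) if answered else 1.0
    return yield_, harvest


# Ten requests during a partition; four of them can only be answered from stale data.
always_answer = [True] * 6 + [False] * 4   # optimize for yield
always_correct = [True] * 6 + [None] * 4   # optimize for harvest

print(yield_and_harvest(always_answer))    # (1.0, 0.6)
print(yield_and_harvest(always_correct))   # (0.6, 1.0)
```

The same partition costs either yield or harvest depending on the policy: answering from stale data keeps every request answered but lowers correctness, while refusing those four requests keeps every answer correct but lowers the share of requests served.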

So for teams and their code, this all boils down to two choices:

- Always be able to ship new code at the cost of possibly using inconsistent code.
- Always use consistent code at the cost of sometimes having to refuse to ship anything.

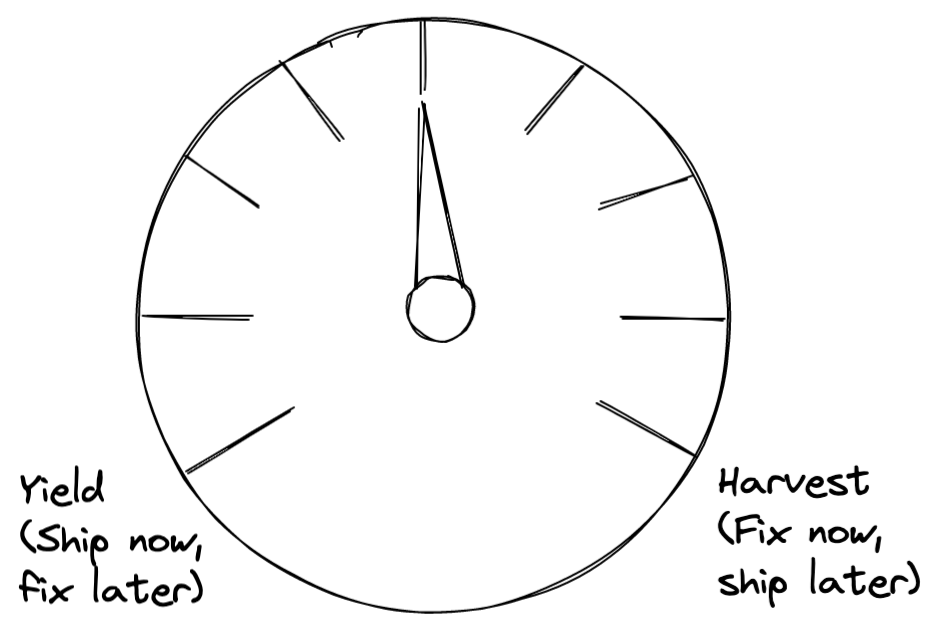

Again, you don’t have to always make the same choice! Think of this as a knob that you can turn to optimize for one or the other.

Turn it to the left to optimize for shipping your changes now, knowing that you may have to fix things later. Or turn it to the right to optimize for making sure things are consistent before you ship them.

Your company culture and values will probably push you one way or the other by default, but a particular project or part of the codebase may require you to lean the other way. For example, login or billing pages may have to optimize for harvest, because a security or billing problem could be incredibly costly. Your blog or a new product may instead optimize for yield and experiment with new technology and branding first. The most important thing is to be explicit about which choice you’re making and why you’re making it. You don’t want to just randomly turn the knob back and forth.
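One way to be explicit about where the knob sits is to write the setting down per area, along with the reason. The sketch below is purely hypothetical: the `KNOB` table, the area names, the default, and the `can_ship` rule (harvest-leaning areas must be on the latest version of a shared library before shipping, yield-leaning areas may ship regardless) are illustrative assumptions, not a tool or process from the original.

```python
from enum import Enum
from typing import Dict, Tuple


class Lean(Enum):
    YIELD = "ship now, reconcile later"
    HARVEST = "be consistent before shipping"


# Hypothetical per-area knob settings, each with the reason it was chosen.
KNOB: Dict[str, Tuple[Lean, str]] = {
    "login": (Lean.HARVEST, "security problems are costly"),
    "billing": (Lean.HARVEST, "billing problems are costly"),
    "blog": (Lean.YIELD, "room to experiment with technology and branding"),
}

DEFAULT = (Lean.YIELD, "company default")  # assumed default set by culture


def can_ship(area: str, shared_version: str, latest_shared_version: str) -> Tuple[bool, str]:
    """Return whether a change in `area` may ship, plus the reason from the knob table."""
    lean, reason = KNOB.get(area, DEFAULT)
    if lean is Lean.HARVEST and shared_version != latest_shared_version:
        return False, f"{area} leans toward harvest ({reason}); upgrade to {latest_shared_version} first"
    return True, f"{area} leans toward {lean.name.lower()} ({reason})"


print(can_ship("billing", "2.3.0", "2.4.0")[0])  # False
print(can_ship("blog", "2.3.0", "2.4.0")[0])     # True
```

Returning the reason alongside the decision keeps the "why" visible every time the rule blocks or allows a change, which is the point of turning the knob deliberately rather than randomly.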

Who is this for and how can you use it?

Hopefully, most of this article is understandable by anyone, but it leans a little technical and so is mostly intended for engineers and engineering leaders in a growing engineering organization. So if you fit one of those roles, what do you do now?

If you’re an engineer, hopefully this helps explain some of the growing pains you may be feeling. You might share this with a manager or other leaders, but most organizations will naturally try to beat the CAP theorem and want completely independent teams that all ship completely consistent code. In fact, engineers themselves may want everyone using the same tools and a nice, consistent codebase, while also wanting complete freedom to use whatever they prefer. Humans are like that.

So next time you find yourself being asked to ship consistent code fast, or you want everyone to be independent as long as they do it your way, stop for a minute and ask which tradeoffs make the most sense. I wouldn’t recommend talking about “yield” and “harvest” unless you can get everyone to read this article, but you can talk about whether to take the time to collaborate on a new API or to ship quickly and collaborate later. Or you can make an informed decision about whether to create a particular shared library and how to handle upgrading it.

If you’re an engineering leader, then I’d recommend thinking about this as you help structure your growing engineering organization. If you want to optimize for independent teams that can ship things as fast as possible, you probably want to create cross-functional teams with clear ownership over isolated products or parts of products developed in separate codebases. If you want to optimize for a consistent user experience or developer experience, you’ll need strong central teams, such as a design system team and a developer tools team, plus proper incentives for other teams to use them, and you may want to foster a culture that values high-quality shared code. If you want to compromise, you’ll need to be clear about how to make those compromises; otherwise, every decision will have to be escalated to central leadership, which means teams aren’t really independent at all.

Most organizations will have to compromise to some extent, and as they grow, they’ll turn the knob back and forth trying to find the right spot. This will cause some confusion and frustration, but just think of it like any other growing system where you’re being forced to make new tradeoffs. There’s no panacea, and mistakes will be made. Hopefully, awareness of the problem will help!
