This piece was written by the members of Zapier’s Team Monitor. Contributors include Michael Sholty, Mike Pirnat, Randy Uebel, Ismael Mendonça, Chelsea Weber, and Brad Bohen.

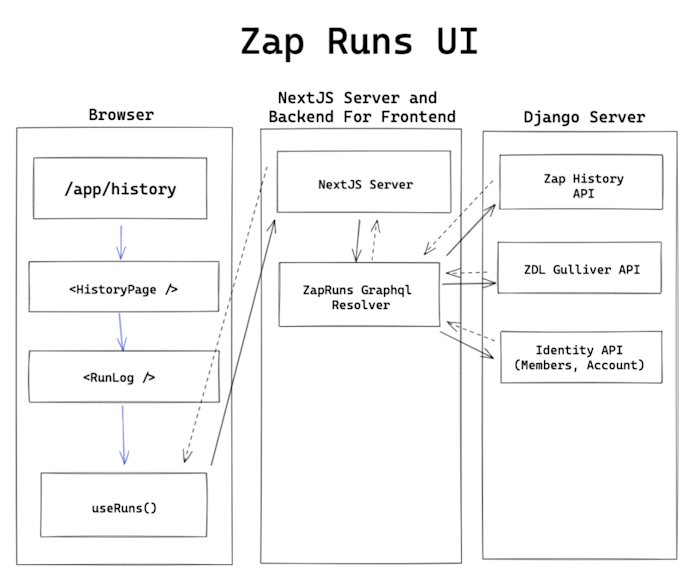

Whenever a Zapier user wants to review which Zaps have run in their account, they visit their Zap History. Built on Next.js, the architecture that supports the Zap History pages surfaces information about millions of individual Zap runs to our users.

Here’s how we’ve organized the frontend and backend pieces that provide this dynamic data.

Frontend

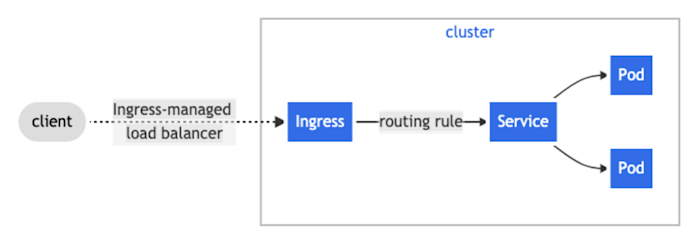

When you visit your Zap History page, you access our Next.js service, which serves the Zap History user interface (UI). We currently deploy our Next.js service to our Kubernetes cluster, which has an Ingress routing rule that routes requests to our service’s pod.

We’ve been using Next.js in some capacity at Zapier for years, but recently we’ve begun separating our UIs into individual services to give teams more control and flexibility to release and maintain their code independently of the rest of Zapier’s platform.

Next.js is a framework that helps us manage our frontend infrastructure by abstracting tools such as Babel, TypeScript, and Webpack and letting us configure major features in a single next.config.js file. Our team wants to focus its time on delivering products to our customers, so we want to spend as little time and energy as possible configuring our framework.

Data Retrieval

We use Apollo Client to manage our state and data retrieval.

Data retrieval is the foundation of our application. We abstract each query we make behind a React hook.

The hook for Zap runs takes the filters that our users configure on Zap History and returns the list of runs to display on the page. Under the hood, this hook is an Apollo useQuery call that queries for runs.

Filters are managed through URL parameters on the page. This acts as pseudo-state management and gives our users a few extra features in the process.
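To illustrate the idea of keeping filters in the URL, here is a minimal sketch that reads filters from a query string and writes them back. The `RunFilters` shape and the parameter names (`status`, `search`, `zap`) are assumptions made for this example; the article does not list the real filter names.

```typescript
// Hypothetical filter shape; the real Zap History filters are not listed in this article.
interface RunFilters {
  status?: string;
  search?: string;
  zapIds: string[];
}

// Read filters from a URL query string such as "?status=error&zap=1&zap=2".
function parseFilters(query: string): RunFilters {
  const params = new URLSearchParams(query);
  const filters: RunFilters = { zapIds: params.getAll("zap") };
  const status = params.get("status");
  const search = params.get("search");
  if (status) filters.status = status;
  if (search) filters.search = search;
  return filters;
}

// Write filters back to a query string so the URL stays the single source of truth.
function serializeFilters(filters: RunFilters): string {
  const params = new URLSearchParams();
  if (filters.status) params.set("status", filters.status);
  if (filters.search) params.set("search", filters.search);
  for (const id of filters.zapIds) params.append("zap", id);
  return params.toString();
}
```

Because the two functions are inverses for these fields, `serializeFilters(parseFilters("?status=error&zap=1&zap=2"))` gives back `status=error&zap=1&zap=2`, and the parsed object can be passed straight to a data hook as its filter argument.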

Backend for frontend

We use Apollo Server in conjunction with Next.js API routes to orchestrate the data from our Zapier APIs to our frontend. This allows us to offload complex logic to our backend so our frontend can focus on rendering our UI. Offloading this code to our Next.js server also reduces our bundle sizes, saving our users’ browsers from having to run it. We call this architecture a “backend for frontend” (BFF for short) because it acts as a backend as far as the browser is concerned, but is maintained by the team’s frontend engineers. Because Apollo Server and Apollo Client are compatible out of the box, we as frontend engineers can quickly write backend code that suits our frontends.
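As a rough sketch of the kind of logic a BFF can take off the browser’s hands, the function below reshapes an upstream API payload into the form a UI component wants to render. Both interfaces and every field name are hypothetical and are not Zapier’s real schema; in a real setup a function like this would be called from a resolver on the server.

```typescript
// Hypothetical upstream REST payload (snake_case), not Zapier's real schema.
interface ApiRun {
  id: string;
  zap_title: string;
  status: string;
  ended_at: string | null;
}

// Hypothetical shape exposed to the browser.
interface RunView {
  id: string;
  title: string;
  isFinished: boolean;
  succeeded: boolean;
}

// Runs on the server, so this logic never ships in the browser bundle.
function toRunView(run: ApiRun): RunView {
  return {
    id: run.id,
    // Fall back to a placeholder when the upstream title is blank.
    title: run.zap_title.trim() || "Untitled Zap",
    isFinished: run.ended_at !== null,
    succeeded: run.status === "success",
  };
}
```

The frontend then receives only the fields it renders and does not need to know how the upstream API names or encodes them.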

We really enjoy using Apollo in conjunction with TypeScript because we generate types for every query we write, so the data fetched in our frontends is strongly typed across our codebase. This way, we don’t have to maintain two sets of types between our frontend and backend. Fortunately, Apollo ships a CLI that generates types when given a schema, so it was very easy for us to adopt that into our regular workflow.

Backend

Zap History consists of two application components:

- A Django web application with a Django REST Framework API.

- A Python daemon that runs as a Django command.

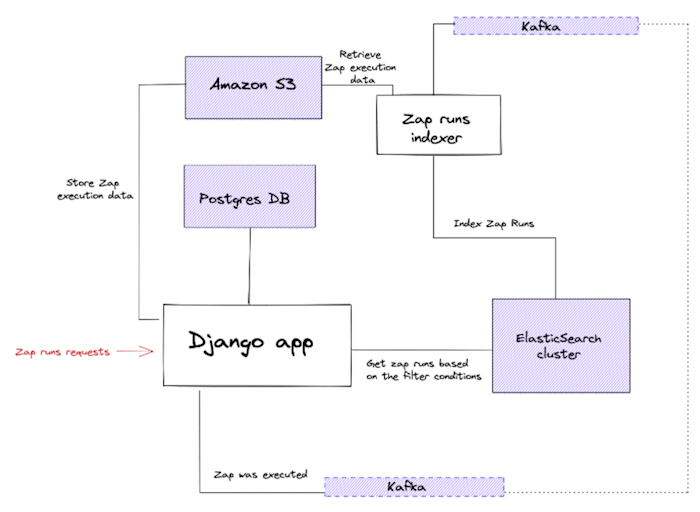

In terms of data stores we use a PostgreSQL database, an Elasticsearch cluster, and we also make use of Amazon S3 for Zap runs data. At Zapier we also have a Kafka cluster that works as the backbone for communication between our service architecture.

The following figure summarizes the current architecture of our Zap History application:

Your Zap runs are produced as follows:

- Every time a Zap is executed, its result is stored in Amazon S3, and an event is emitted to our Kafka topic with enough information to process the Zap execution result (the Zap run).

- The events are consumed by a separate service called the “Zap runs indexer.” The indexer consumes a batch of events and downloads from S3 part of the Zap run data associated with the events in the batch. The indexer keeps Zap run data processing separate from the main Django application that handles the API. The run data lives in S3 because a Zap execution can contain a lot of input and output data, which would be too heavy to send as part of an event.

- The Zap run data is processed and indexed in bulk into our Elasticsearch cluster.
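The final step, indexing in bulk, can be sketched against the request format of the Elasticsearch bulk API, which expects newline-delimited JSON: one action line followed by one document line per item, ending with a newline. The real indexer is a Python daemon; TypeScript is used here only for illustration, and the `RunDocument` fields and the index name are assumptions.

```typescript
// Hypothetical document shape; the real indexed fields are not described in this article.
interface RunDocument {
  runId: string;
  zapId: string;
  status: string;
}

// Build the newline-delimited JSON body for one Elasticsearch bulk request.
function buildBulkBody(index: string, docs: RunDocument[]): string {
  const lines: string[] = [];
  for (const doc of docs) {
    // Action line: index this document under its run ID.
    lines.push(JSON.stringify({ index: { _index: index, _id: doc.runId } }));
    // Source line: the document itself.
    lines.push(JSON.stringify(doc));
  }
  // Every line, including the last one, must be terminated by a newline.
  return lines.map((line) => line + "\n").join("");
}
```

Sending one body for the whole batch means a batch of events costs a single indexing request instead of one request per Zap run.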

Every time you go to the Zap runs tab in the Zap History section, this is what happens:

- The browser sends a request to our Zap History application endpoints. This request includes any filter conditions you have chosen, as well as your account ID and your user, so that we can identify your runs.

- We process the request parameters (filters, account ID, and user) and retrieve the Zaps involved in the Zap runs from the Postgres database.

- We use part of the filters and the Zaps retrieved in the previous step to query Elasticsearch for your Zap runs. We use Elasticsearch so that you can also look for Zap runs with free-text searches.

- The retrieved documents with Zap data are parsed in the Django application and returned as the API response.
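The Elasticsearch step above could be sketched as a function that turns the request parameters and the Zap IDs from Postgres into a query body. This uses the standard Elasticsearch query DSL (`bool`, `term`, `terms`, `simple_query_string`), but the field names `account_id`, `zap_id`, and `status` are assumptions, and the real backend builds its queries in Python rather than TypeScript.

```typescript
// Hypothetical request shape: account, Zaps found in Postgres, and optional filters.
interface RunSearchRequest {
  accountId: number;
  zapIds: number[];
  status?: string;
  text?: string;
}

type Clause = Record<string, unknown>;

interface RunQuery {
  query: { bool: { filter: Clause[]; must: Clause[] } };
}

// Build an Elasticsearch query body: exact-match filters plus optional free-text search.
function buildRunQuery(req: RunSearchRequest): RunQuery {
  const filter: Clause[] = [
    { term: { account_id: req.accountId } },
    { terms: { zap_id: req.zapIds } },
  ];
  if (req.status) filter.push({ term: { status: req.status } });
  // Free text goes in "must" so it contributes to relevance scoring.
  const must: Clause[] = req.text ? [{ simple_query_string: { query: req.text } }] : [];
  return { query: { bool: { filter, must } } };
}
```

Exact-match conditions sit in `filter`, which does not affect scoring, while the free-text search sits in `must`, which is the part that benefits from a search engine rather than a relational database.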

Looking at our backend infrastructure, the Django application and the indexer daemon both run in a Kubernetes cluster, while everything else runs on Amazon:

- Amazon RDS for our PostgreSQL database.

- Amazon MSK for the Kafka cluster.

- Amazon EC2 to host our Elasticsearch cluster deployment.

Hopefully, this bird’s-eye view of our architecture helps you understand how we put together the Zap History experience, from UI to API to database. In future articles, we’ll share similar deep dives into the solutions we’ve found to interesting problems in this space.
