Separating fact from fiction in SEO can be really difficult.

In a previous post, I looked at the validity of five popular rumours from across the search industry. In this follow-up, I focus on analysing the real impact of another six similar claims that are regularly doing the rounds.

Rumour 1: Mobile indexing changes everything

Desktop is no longer the single source of truth for Google indexing. I saw an example of this where a company’s desktop search results showed the same ‘jump marks’ (links to subheads within content) as its mobile results. The trouble was that the desktop version of the site had no links associated with these subheads, which perplexed the company (and me). In this case, Google was indexing the client’s mobile site, including the links present there, and showing the result on desktop too.

You, therefore, need to act accordingly. Most SEO teams still run tests on desktop, but if Google is crawling your mobile version, you need to run everything, all your tests and all your health checks, on mobile too, preferably on a real smartphone to get the full experience.

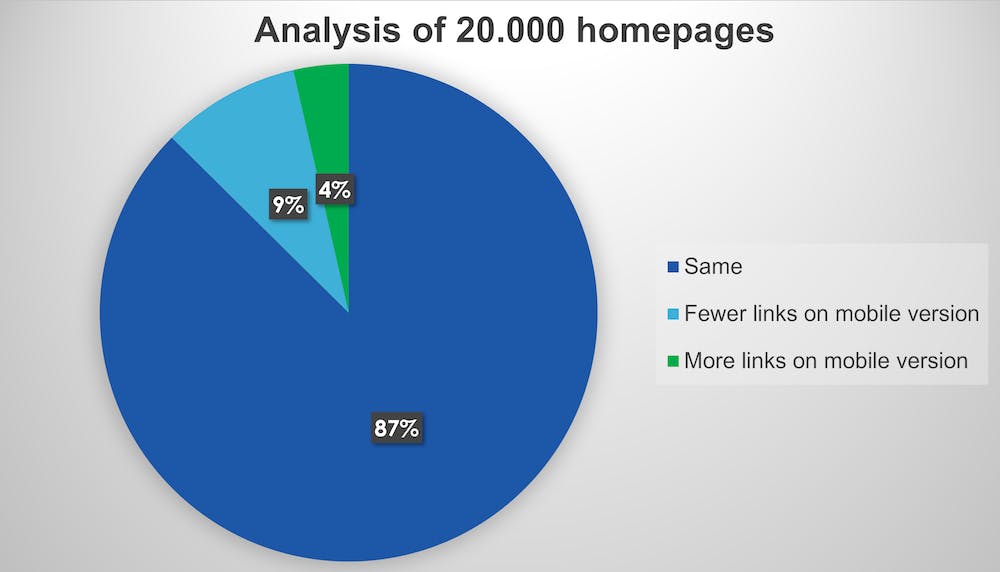

There are also significant differences in the number of hyperlinks between mobile and desktop. A study from Moz crawled 20,000 homepages and checked whether the homepage links were the same on both versions. Thirteen percent were different and, when crawling in more depth, 63% of external links turned out to exist only on desktop. This means you might have built a lot of backlinks that appear only on desktop pages and therefore do nothing for your rankings when Google indexes the mobile version. Again, check and act accordingly.
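One way to run this kind of check yourself is to compare the links found in the desktop and mobile HTML of the same page. The sketch below is only an illustration of the idea, not the method used in the Moz study: the two HTML snippets are made-up sample data, and `extract_links` and `compare_links` are hypothetical helper names. In practice you would fetch each version with the appropriate user agent first.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.add(value)


def extract_links(html):
    """Return the set of link targets found in an HTML string."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


def compare_links(desktop_html, mobile_html):
    """Split links into desktop-only, mobile-only and shared sets."""
    desktop = extract_links(desktop_html)
    mobile = extract_links(mobile_html)
    return {
        "desktop_only": desktop - mobile,
        "mobile_only": mobile - desktop,
        "shared": desktop & mobile,
    }


# Made-up sample markup standing in for the two versions of one page.
DESKTOP_HTML = (
    '<a href="/pricing">Pricing</a>'
    '<a href="https://partner.example/">Partner</a>'
)
MOBILE_HTML = '<a href="/pricing">Pricing</a><a href="#faq">FAQ</a>'

result = compare_links(DESKTOP_HTML, MOBILE_HTML)
print(result["desktop_only"])  # links Google may not see on the mobile version
```

Any link that lands in `desktop_only` is a candidate for the problem described above: it exists for desktop visitors but may be missing from the version Google indexes.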

Rumour 2: Ranking factors are dead

It is easy to draw the wrong conclusions when analysing SEO best practices. Take the average length of articles. At Searchmetrics we looked at the word count for articles that ranked in the top 10 for Google results. The average across a range of different topics and keywords was 1,692 words. Does that make writing nearly 1,700 words best practice?

Dig into the data and the answer is clearly “no”. If you split it by industry it varies greatly – from 700 words for camping up to 2,500 for financial planning.
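The effect is easy to reproduce with a few lines of code. The sketch below uses invented word counts, not Searchmetrics data, and `average_by_group` is a hypothetical helper. It simply shows how a single overall average can sit far away from what is typical in any one industry.

```python
from collections import defaultdict
from statistics import mean

# Invented (industry, word_count) pairs for illustration only.
PAGES = [
    ("camping", 650),
    ("camping", 750),
    ("financial planning", 2400),
    ("financial planning", 2600),
]


def average_by_group(pages):
    """Return the mean word count for each industry."""
    groups = defaultdict(list)
    for industry, words in pages:
        groups[industry].append(words)
    return {industry: mean(counts) for industry, counts in groups.items()}


overall = mean(words for _, words in PAGES)
by_industry = average_by_group(PAGES)

print("overall:", overall)
for industry, average in by_industry.items():
    print(industry, average)
```

With this sample, the overall figure describes neither group well, which is why a ranking factor should be analysed per industry or per type of search rather than across the whole index.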

The same point applies to all ranking factors – there are some industries or searches where a factor has a high correlation with search position and others where it doesn’t. For example, when I search for “buy trainers” online, seeing lots of small images is probably good as it helps me make a choice faster. However, if I search for “buy Air Force One Black size 6” it makes more sense to show a couple of large pictures as my intent is clearer. Google understands this, meaning that different factors will be important depending on the intent of the search.

Rumour 3: BERT changes everything

Bidirectional Encoder Representations from Transformers, or BERT to its friends, is Google’s natural language processing model, which Google announced for Search in October 2019.

I’ve heard so many rumours about BERT – the worst ones being that it is related to schema and that URLs get a BERT score, which influences how they rank. Both are simply untrue. If you check on third-party tools, you’ll see little SERP fluctuation in the week BERT was announced. Actually, you will not even find agreement among SEOs on the exact date that BERT was – supposedly – rolled out.

The reason for this is that BERT’s actual focus is on recognising words and the relationships between them, and on using those relationships to understand the searcher’s intent more accurately. Take a search for “train from Leeds to Liverpool.” In the past, if you had the phrase “fly from Paris to London and then take a train to Liverpool with no stop in Leeds” on your website, you could have ranked for the search, because you had all the individual words present. Now BERT recognises that there is a relationship between the individual words, and that this phrase does not describe a train journey from Leeds to Liverpool.
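To see why pure keyword matching is fooled by this example, consider the toy sketch below. It is not how BERT or Google works: `contains_all_words` mimics naive word matching, and `contains_phrase_in_order` is a deliberately crude stand-in for "the words relate to each other in the way the query expects". Both function names are hypothetical.

```python
import re


def tokens(text):
    """Lower-case a string and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def contains_all_words(query, page_text):
    """Naive matching: every query word appears somewhere on the page."""
    return set(tokens(query)) <= set(tokens(page_text))


def contains_phrase_in_order(query, page_text):
    """Crude relationship check: the query words appear as one contiguous run."""
    q = tokens(query)
    p = tokens(page_text)
    return any(p[i:i + len(q)] == q for i in range(len(p) - len(q) + 1))


QUERY = "train from Leeds to Liverpool"
PAGE = (
    "fly from Paris to London and then take a train to Liverpool "
    "with no stop in Leeds"
)

print(contains_all_words(QUERY, PAGE))        # True
print(contains_phrase_in_order(QUERY, PAGE))  # False
```

The page passes the word-level test even though it answers a different question. A model that looks at how the words relate to each other can tell the two apart.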

So, the only rankings you would lose because of BERT are those where you didn’t really fulfil the search intent. Yes, BERT is a big change for Google in terms of how it processes queries and language but not a huge update in terms of ranking.

Rumour 4: You don’t get traffic from Google anymore

People have been telling me this for 10 years. What you can see is that for an increasing number of basic informational questions (for example “How old is Boris Johnson?”) Google responds with a Featured Snippet or Direct Answer. In fact, my research shows that Google will now answer nearly half (49%) of questions with either an Answer Box (sometimes referred to as a Direct Answer) or a Featured Snippet.

So, this will hit your traffic, but only if your whole business model is based on answering simple questions with simple answers.

Rumour 5: Usability is important

I touched on usability in my previous blog post when discussing the importance of speed to ranking well for particular keywords. Essentially, if you analyse Google Lighthouse tests (the search giant’s own set of automated tests that webmasters can run on their websites), you can see that scoring well for factors such as “Does not use unreadable fonts” is clearly correlated with high rankings.

Other Lighthouse audits also back up the correlation of usability factors with rankings.

Also, when we analyse what happened after Google’s Core Update in March 2019, the data suggests that the search algorithm increased its weighting on user signals when calculating rankings. For example, websites that improved their search performance following that Core Update had higher values for time-on-site and page-views-per-visit, and lower bounce rates than their online competitors.

Simply put: user signals are some of the “hardest” ranking factors. If searchers are spending more time on a site, opening more pages per visit and bouncing back to the Google search results less often, then the site must be doing something right.

So, to succeed in SEO, build websites with great usability.

Rumour 6: Content must be updated

I’m going to finish with what I think is the best SEO advice that I can give anybody – keep updating your evergreen content, rather than publishing new versions.

You probably know that evergreen content is content that revolves around topics that are always going to be important and relevant to your target audiences. It covers questions that searchers are always going to want answers to.

Of course, even evergreen content will need to be adapted and evolve as things change. But the minute you delete those pages and create new, more up-to-date content, you immediately lose all the momentum, deep links, and authority they’ve built up with Google. Stick with updating the pages that are already there and you will continue to perform well.

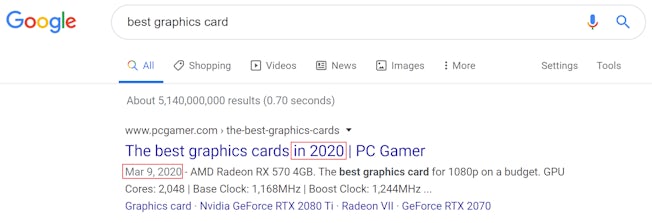

A perfect example of this is what you see when you search for “best graphics cards”. The result from PC Gamer has 2020 in its title and is dated 2 January.

But if you go to the website and look at the comments on the page, they date back five years! It is evergreen content that has been regularly updated since then. Not only has the article continuously ranked in the top 5 of Google for the search “graphics card” but it also ranks for 3,798 other organic keywords in our database such as “best graphics card” and “best gaming graphics card”, delivering thousands of visitors that would cost thousands of dollars to bring in via AdWords.

This shows that updating evergreen content again and again works really well. So, before you publish a new article on a particular subject, ask yourself whether you can simply adjust an existing piece.

Hopefully, this analysis will help you focus your SEO efforts and drive the right results for your business – based on facts rather than the wilder rumours circulating across the industry.